June 26, 2025

Day 23 – Concluding the Codebase

What I Learned

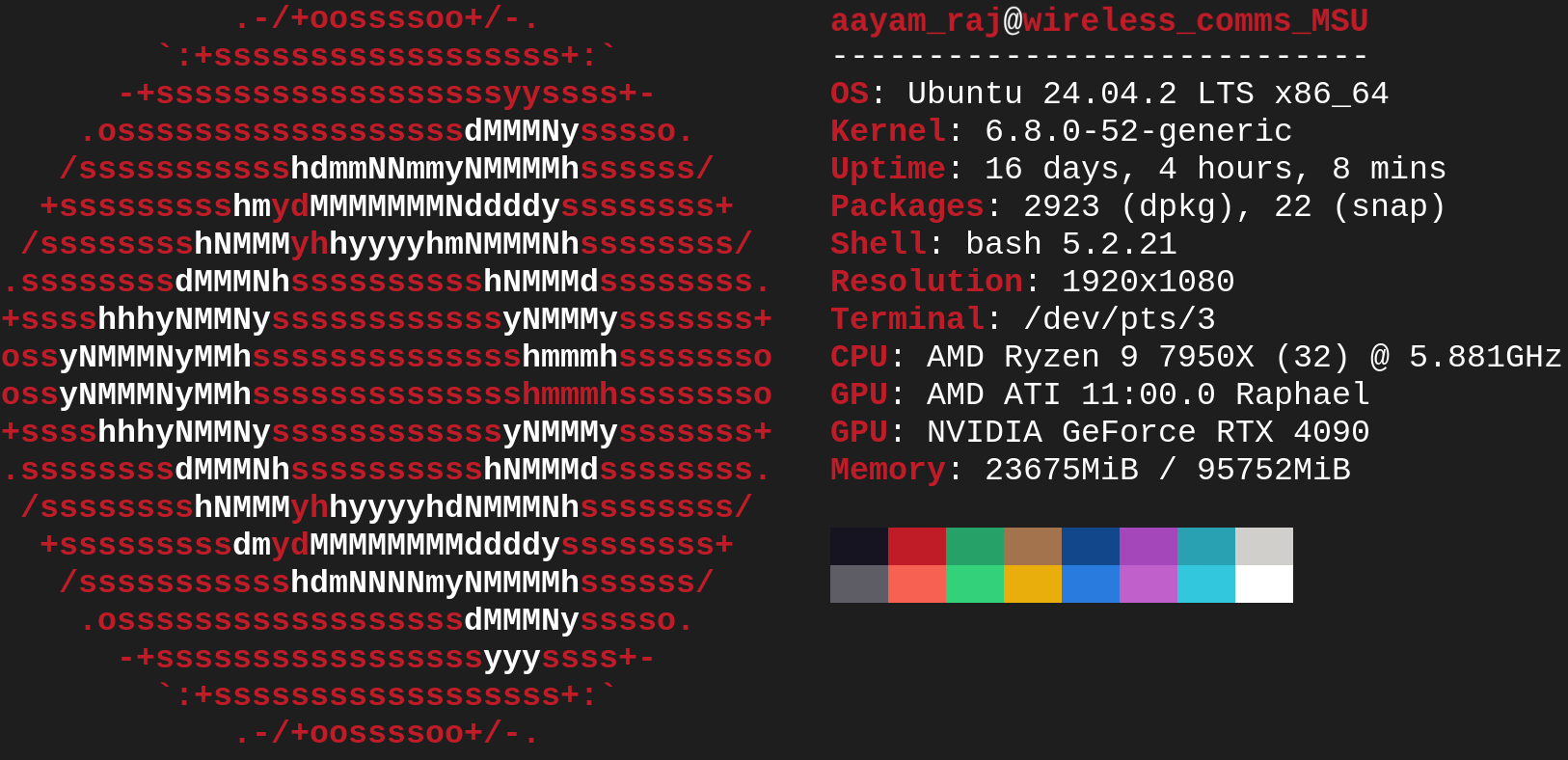

After gaining access to my workstation in the wireless communications research lab at MSState, I was so excited that I worked the whole night to train the model.

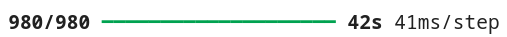

Training on the RTX 4090 was extremely fast, averaging just 42 seconds per epoch, compared to around 16 minutes per epoch on the CBEIS lab PC.
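To put those two numbers side by side, here is a quick back-of-the-envelope calculation. It is only a rough sketch: both timings are rounded averages from this entry, and the variable names are just ones I made up for illustration.

```python
# Per-epoch timings reported in this entry (rounded averages)
rtx4090_epoch_s = 42        # seconds per epoch on the RTX 4090
lab_pc_epoch_s = 16 * 60    # about 16 minutes per epoch on the CBEIS lab PC

# How many times faster one epoch is on the RTX 4090
speedup = lab_pc_epoch_s / rtx4090_epoch_s
print(f"Per-epoch speedup: {speedup:.1f}x")

# Projected wall-clock time for an 18-epoch run
# (18 is the epoch at which early stopping halted this run)
epochs = 18
rtx4090_total_min = epochs * rtx4090_epoch_s / 60
lab_pc_total_h = epochs * lab_pc_epoch_s / 3600
print(f"RTX 4090: {rtx4090_total_min:.1f} min")
print(f"Lab PC:   {lab_pc_total_h:.1f} h")
```

With these figures, one epoch is roughly 23 times faster on the RTX 4090, and an 18-epoch run drops from several hours to under 15 minutes.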

I completed the training, which usually takes an entire workday, in under 15 minutes (partly thanks to the early stopping callback, which halted training at the 18th epoch). I also finished the Optuna code, which I will use to tune the number of epochs, learning rate, optimizer type, batch size, and dropout rate. Because an Optuna search takes much longer than a single training run, even on the 4090, I plan to run it over the weekend. My codebase is now complete; the only changes that might still be needed are adjustments to the layers in the ensemble model. Beyond that, my full focus will be on tuning the hyperparameters.

Blockers

No issues faced.

Reflection

The coming days will be exciting as I begin fine-tuning hyperparameters, prepare for the mid-summer presentation, and start writing the research paper. I am very thankful to my PhD supervisor, Josh, for allowing me to use my workstation for external work. With this powerful machine, I can run the model much more efficiently: up to 136x faster than when I first began training the model with Google Colab.