June 17, 2025

Day 16 – Exploring data augmentation and hyperparameter tuning

What I Learned

Today, I arrived at the lab quite early and found Pelumi and Michelle moving computers from another lab into ours, since Michelle can’t always use the admin’s PC. I helped her set up Anaconda, Jupyter, and the CUDA toolkit (needed to use the GPU) on her PC. After that, I moved to my workspace and started watching videos on hyperparameter tuning. One YouTube video used keras_tuner, but the approach it showed was exhaustive and felt complicated. I am looking for something that uses Bayesian optimization, like Optuna, but I didn’t find many tutorials that teach Optuna with TensorFlow and Keras, which is the stack we are using. The tutorials that do exist mostly focus on traditional machine learning algorithms rather than deep learning, which is quite different. I have also restarted my previous ensemble codebase from scratch to add some enhancements.

Blockers

No issues faced.

Reflection

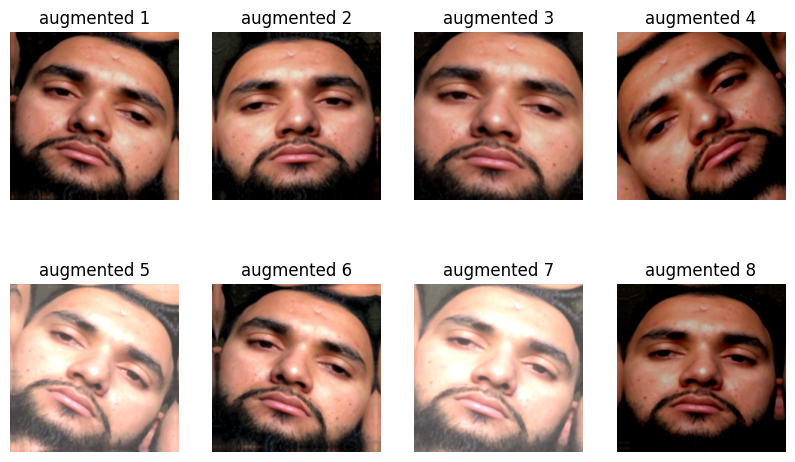

Today went okay. I spent a good amount of time helping my peers troubleshoot their issues and watching new videos on the topics I am assigned to handle. I noticed that many people in the team video discussions were asking how we are going to handle training on low-light images. I think this can be addressed with data augmentation using the RandomBrightness and RandomContrast layers from tf.keras.layers. This approach should improve our model’s performance, especially if we train it on a substantial number of dark images or images that mimic nighttime. I am also excited to meet our high school teacher participant tomorrow.
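
A rough sketch of the low-light augmentation idea, assuming images are float arrays in the 0 to 255 range. The factor values, the random placeholder images, and the helper name darken_and_vary are my own guesses for illustration, not settings we have agreed on. A negative-only brightness range is used so images are only ever darkened.

```python
import numpy as np
import tensorflow as tf

# Brightness factor in [-0.6, 0.0] only darkens; contrast varies by up to 30%.
low_light_augment = tf.keras.Sequential([
    tf.keras.layers.RandomBrightness(factor=(-0.6, 0.0)),
    tf.keras.layers.RandomContrast(factor=0.3),
])


def darken_and_vary(batch):
    # training=True is required; these layers are inactive at inference time.
    out = low_light_augment(batch, training=True)
    # Clip explicitly, since contrast adjustment can push values out of range.
    return tf.clip_by_value(out, 0.0, 255.0)


# Random placeholder batch standing in for real training images.
rng = np.random.default_rng(0)
images = tf.constant(rng.uniform(0.0, 255.0, size=(4, 64, 64, 3)), dtype=tf.float32)
augmented = darken_and_vary(images)
print(augmented.shape)
```

The same two layers could also be placed at the start of the model itself, so the augmentation runs on the GPU during training and is skipped automatically during prediction.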