June 13, 2025

Day 14 – Happy Maroon Friday!!

What I Learned

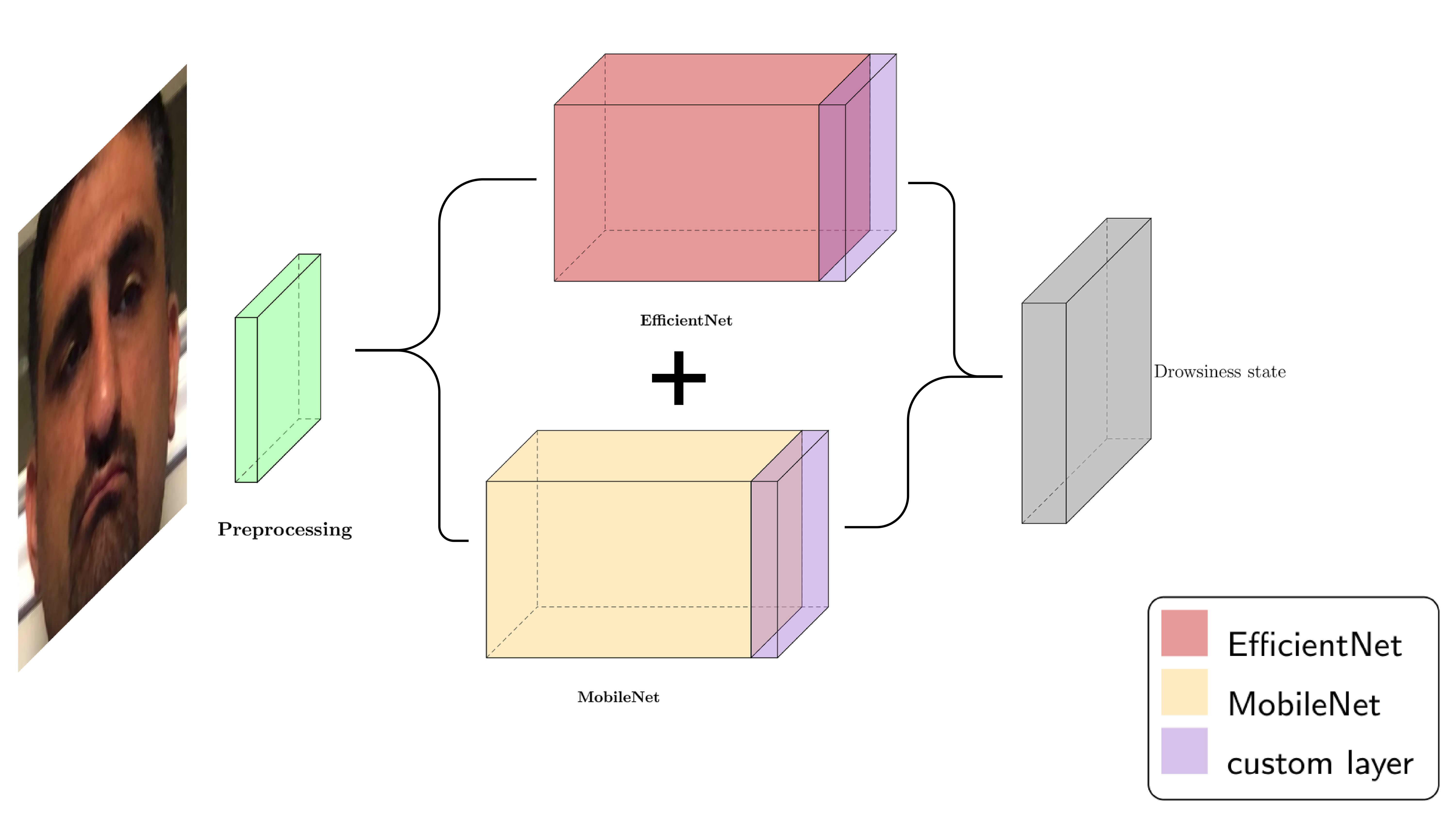

Pelumi briefed us on the goals for next week: scaling up the models, optimizing them, and fine-tuning their hyperparameters. I introduced Pelumi to some hyperparameter tuning approaches, such as grid search and Optuna, an open-source hyperparameter optimization framework. Since he was new to them, I explained how each one works, and he seemed keen on using Optuna in our project. Pelumi then assigned each of us tasks for next week: Yusrat will handle DenseNet, Michelle will handle MobileNet, Ignatius will look after EfficientNet, and I will take care of the overall ensemble architecture.
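To make the grid search idea concrete, here is a minimal sketch in plain Python. The `toy_objective` function is a hypothetical stand-in for "train a model with these hyperparameters and return its validation accuracy", and the `lr` and `batch_size` values are illustrative assumptions, not our project's actual search space. Grid search simply tries every combination (here 3 × 3 = 9 trials); Optuna instead proposes trials adaptively based on earlier results (its default sampler is TPE), which is why it usually needs fewer trials on larger search spaces.

```python
from itertools import product


def grid_search(objective, param_grid):
    """Evaluate every combination in param_grid and return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    # product(...) yields every combination of the candidate values, one per name.
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(params)
        if score > best_score:  # higher is better, e.g. validation accuracy
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical stand-in for "train the model and return validation accuracy".
def toy_objective(params):
    return -(params["lr"] - 0.01) ** 2 - 0.001 * abs(params["batch_size"] - 32)


grid = {"lr": [0.1, 0.01, 0.001], "batch_size": [16, 32, 64]}
best, score = grid_search(toy_objective, grid)
print(best)  # {'lr': 0.01, 'batch_size': 32}
```

The cost of grid search grows multiplicatively with each added hyperparameter, which is the main reason to prefer an adaptive tool like Optuna once the search space gets large.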

Blockers

No issues faced.

Reflection

For me, every Friday is a good day. We started the day by meeting Pelumi and briefing him on our findings about the architectures we studied this week. I presented on MobileNetV2, a lightweight CNN architecture. Despite its efficiency, I concluded that it is not a good fit for our system, as its accuracy is lower than that of the other models we studied, such as EfficientNet and DenseNet. It also has a limitation: while it performs well on general benchmarks, it may not perform as well on highly specialized tasks like ours. Therefore, I suggested to Pelumi and the team that we move on to other architectures.

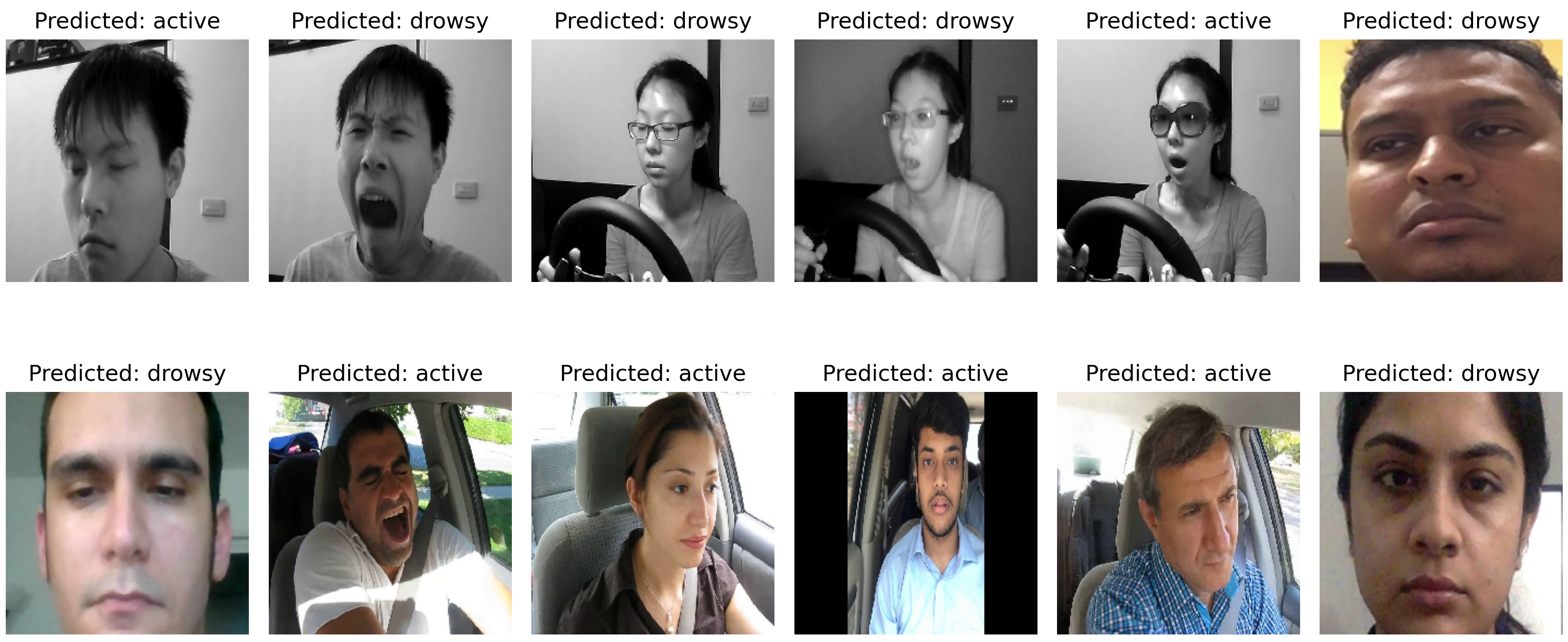

I had also left my PC training overnight and checked the results. Early stopping halted training at epoch 27, meaning the monitored validation metric had stopped improving for the configured number of epochs, so training further was unlikely to improve the model's accuracy. I evaluated my ensemble model's metrics, and they were better than I expected.
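For anyone unfamiliar with early stopping, the sketch below shows the usual "patience" rule in plain Python: training stops once the validation loss has failed to improve for `patience` consecutive epochs. The loss values and `patience=3` are made-up assumptions for illustration; I have not recorded the exact patience or monitored metric here. Frameworks such as Keras provide the same logic as a callback (`tf.keras.callbacks.EarlyStopping`, with `monitor` and `patience` arguments).

```python
def early_stopping(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-based.

    stop_epoch is when training would halt; it equals len(val_losses) if the
    patience window is never exhausted.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            # Improvement: remember it and reset the patience counter.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch


# Hypothetical validation losses: improvement stalls after epoch 3.
losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.73, 0.75]
print(early_stopping(losses, patience=3))  # (6, 3)
```

Note that the stop epoch comes `patience` epochs after the best one, so the weights worth keeping are usually those from the best epoch (Keras exposes this as `restore_best_weights=True`).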

I showed these results to Pelumi and the team, and they were impressed. We then started working on the weekly team presentation slides and video.