June 11, 2025

Day 12 – Coding contd.

What I Learned

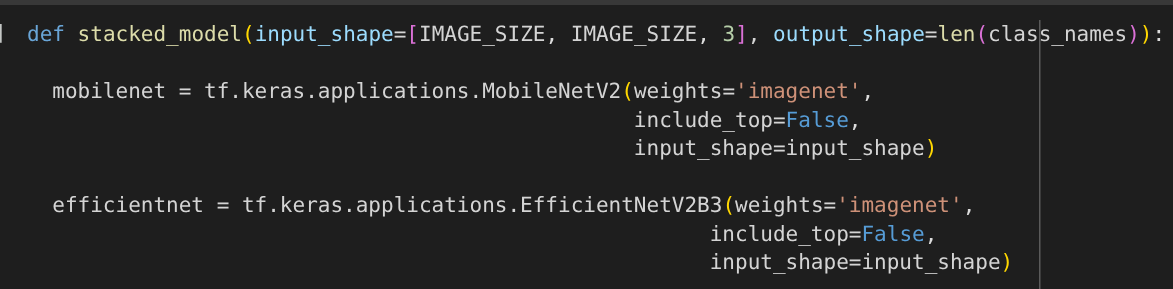

Today was a bit frustrating. I finally finished coding my ensemble model, which combines MobileNetV2 and EfficientNetV2B3 (I switched from EfficientNetV2L because of its much larger parameter count).
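The entry doesn't say how the two backbones' outputs are combined. One common option is soft voting: average each model's predicted class probabilities and take the argmax. The sketch below assumes that approach and works on plain Python lists of softmax outputs so it runs without TensorFlow; `soft_vote`, `predict_labels`, and the toy probabilities are hypothetical illustrations, not the actual project code.

```python
from typing import List, Optional, Sequence

# Hypothetical type alias: one row of class probabilities per sample.
Probs = Sequence[Sequence[float]]


def soft_vote(model_probs: Sequence[Probs],
              weights: Optional[Sequence[float]] = None) -> List[List[float]]:
    """Weighted average of per-class probabilities across models (soft voting)."""
    if not model_probs:
        raise ValueError("need at least one model's predictions")
    if weights is None:
        weights = [1.0] * len(model_probs)  # equal weighting by default
    if len(weights) != len(model_probs):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    n_samples = len(model_probs[0])
    n_classes = len(model_probs[0][0])
    averaged: List[List[float]] = []
    for i in range(n_samples):
        averaged.append([
            sum(w * probs[i][c] for w, probs in zip(weights, model_probs)) / total
            for c in range(n_classes)
        ])
    return averaged


def predict_labels(averaged: Probs) -> List[int]:
    """Pick the class with the highest averaged probability for each sample."""
    return [max(range(len(row)), key=lambda c: row[c]) for row in averaged]


if __name__ == "__main__":
    # Toy softmax outputs for 2 samples x 2 classes (illustrative values only).
    mobilenet_probs = [[0.7, 0.3], [0.4, 0.6]]
    effnet_probs = [[0.6, 0.4], [0.8, 0.2]]
    avg = soft_vote([mobilenet_probs, effnet_probs])
    print(predict_labels(avg))  # [0, 0]
```

In the toy example, MobileNetV2 alone would label the second sample as class 1, but the averaged probabilities favor class 0. In a Keras setup, each entry of `model_probs` would typically be the output of that model's `predict` call on the same batch.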

When I started training on Google Colab, I found out that I had already used up my free GPU hours. I also learned that this department doesn't have a dedicated GPU server, so my team and I were essentially wasting time training models on the CPU that would take 50+ hrs to finish.

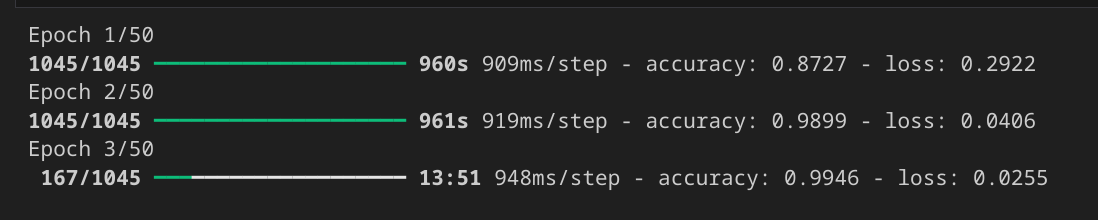

I moved our original dataset to my local machine and started training there. Training on my PC turned out to be significantly faster than on Google Colab: a single epoch takes 1 hr 49 mins on Colab's CPU, ~35 mins on its T4 GPU, and only ~16 mins on my machine. This is surprising, given that my PC has a modest AMD Ryzen 6500 GPU. With the number of epochs set to 50, training will still take about 13 hrs (50 × 16 mins ≈ 13.3 hrs), but I'm hoping the early stopping callback will halt it somewhat earlier; we'll see.
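The time estimate and the hoped-for early stop can be sketched in plain Python. This is a minimal, hypothetical sketch: `estimate_hours` and `early_stop_epoch` are illustrative names, and `early_stop_epoch` only mirrors the core patience rule of a callback like Keras's `EarlyStopping(monitor="val_loss", patience=..., min_delta=...)`. The entry doesn't state which metric or patience value is used, and the validation losses below are toy numbers, not real training logs.

```python
from typing import Sequence


def estimate_hours(minutes_per_epoch: float, epochs: int) -> float:
    """Wall-clock estimate assuming every epoch takes the same time."""
    return minutes_per_epoch * epochs / 60


def early_stop_epoch(val_losses: Sequence[float], patience: int,
                     min_delta: float = 0.0) -> int:
    """Return the 1-based epoch after which training would stop.

    Stops once validation loss has failed to improve on the best value
    by more than min_delta for `patience` consecutive epochs.
    If that never happens, all epochs run.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss  # new best: reset the counter
            wait = 0
        else:
            wait += 1  # no improvement this epoch
            if wait >= patience:
                return epoch
    return len(val_losses)


if __name__ == "__main__":
    # Per-epoch times from this entry, scaled to 50 epochs.
    for name, mins in [("Colab CPU", 109), ("Colab T4", 35), ("Local PC", 16)]:
        print(f"{name}: {estimate_hours(mins, 50):.1f} hrs for 50 epochs")
    # Toy validation losses (illustrative only).
    toy_losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.68, 0.64]
    print(early_stop_epoch(toy_losses, patience=3))  # 6
```

The same arithmetic gives roughly 91 hrs on Colab's CPU and 29 hrs on the T4, which fits the 50+ hr figure for CPU training. Note that in the toy run, training stops at epoch 6 even though epoch 7 would have improved; a larger patience trades extra epochs for less risk of stopping too early.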

Apart from coding, I also watched a couple of videos on MobileNet and DenseNet, and one on 1x1 convolutions by our own Andrew Ng <3

Blockers

No issues faced.

Reflection

As I mentioned earlier, my whole team and I had exhausted our free GPU hours on Google Colab, so we were essentially letting the models train on the CPU while doing other tasks on the side. It was disappointing to learn that we don't have access to a GPU server in this department. I wanted to SSH into the rackstation in my research lab back at MSState, but it seems someone had switched it off, so I couldn't log in. That rackstation has an A100 GPU, which would have been very useful to me today.

Still, the day wasn't entirely unproductive, since I watched some additional videos on MobileNet. We have been tasked with presenting an overview of each model (MobileNet V1 and V2, DenseNet121, and EfficientNetB0 V1 and V2), including its strengths and weaknesses, to Pelumi on Friday, so I also started preparing the slides.